Last month I was invited along to the inaugural EyeEm Festival & Awards. Among other things, I was on a panel on "The Camera of Tomorrow". I do quite a bit of panel-ing (is that a word?) but I'm unable to shake this particular one from my mind, because at some point, the discussion wandered into a topic that I hadn't given much thought to before: Who actually consumes photography?

Are photos for humans or machines?

One of the big and scary ideas that came up was that the average photograph - or frame in a video, as may be the case - is no longer primarily for human consumption. As computers and image recognition become better, we now live in a world where, even if your photo is seen by 50 of your friends on Facebook, that very same photo will be seen by hundreds, if not thousands, of robots. Image recognition bots, facial recognition bots, localisation calculation bots, Google Images, scientific and statistical analysis bots... Who knows.

If you think about it: say you are a scientist who is trying to map the increase or decrease of water in a particular lake. You could install expensive equipment - but where would you get historical data? Well, if that lake happens to be a popular holiday destination that people tend to share photos of, you could actually do image-driven research: Photos taken on smartphones are tagged in the metadata with a time and a GPS location, so you could scrape the internet for photos taken in that particular location, then use image recognition software to estimate the water level in the lake. Science fiction? Nope - perfectly possible, and projects like this are already in action at universities and in commercial settings all around the world.
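To make the lake idea a bit more concrete, here is a minimal Python sketch of the bookkeeping side of such a project. Everything in it is an assumption for illustration: the `Photo` record, the `near_lake` box check and the `water_level_m` field (standing in for whatever an image recognition step would output) are hypothetical, and the scraping and the image analysis themselves are not shown.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime
from statistics import mean


@dataclass
class Photo:
    taken_at: datetime    # timestamp from the photo's metadata
    lat: float            # GPS latitude from the photo's metadata
    lon: float            # GPS longitude from the photo's metadata
    water_level_m: float  # hypothetical estimate from an image recognition step


def near_lake(photo, lake_lat, lake_lon, max_deg=0.05):
    """Crude location filter: is the photo inside a small box around the lake?"""
    return (abs(photo.lat - lake_lat) <= max_deg
            and abs(photo.lon - lake_lon) <= max_deg)


def yearly_water_level(photos, lake_lat, lake_lon):
    """Average the estimated water level per year for photos taken at the lake."""
    by_year = defaultdict(list)
    for photo in photos:
        if near_lake(photo, lake_lat, lake_lon):
            by_year[photo.taken_at.year].append(photo.water_level_m)
    return {year: mean(levels) for year, levels in sorted(by_year.items())}
```

Feed it a few thousand holiday snaps and you would get one averaged estimate per year: a rough historical record that nobody set out to create.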

That's a relatively benign example: What about the data we put out there ourselves? The trend of taking selfies is an incredibly powerful tool: By posting photos to Twitter, and describing (or even hashtagging) each photo as a 'selfie', you make it possible for computers to map your ageing process over the years, and potentially learn about the way that human faces tend to age. Add a layer of geo-location on top, and perhaps scientists will find that humans living near power stations have, on average, slower hair growth (just to pick an example). To a data scientist / statistician, the possibilities are absolutely mind-boggling.

Of course, there are less benign uses too: By posting selfies online, you're feeding an incredibly powerful facial recognition opportunity. Facial recognition based on a single photograph can be incredibly difficult (think about it: You're just a new pair of glasses, a baseball cap, or a beard away from looking very different from that single photograph). If, instead, someone were to collect all the photos I've ever posted of myself online, they'd have a huge amount of data: What I look like in different lighting situations, with a hat, with a beard, with glasses on, in the morning, in the evening, smiling, angry, moody... All of this is data that could be used to create a mathematical formula for what "I" look like. Feed that into a network of CCTV cameras (they say that you can travel from anywhere to anywhere else in London without ever stepping out of reach of a CCTV camera), and it's possible to track my every movement through my home city. Scary? Perhaps - but seen through that lens, it becomes all the clearer that the chief consumers of imagery are, indeed, computers - and by sharing photos of ourselves, we're making it a lot easier for whoever wants to track us around.
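What might a "mathematical formula for what I look like" be in practice? One common way to think about it (an assumption here, not a description of any particular system) is that each photo of a face gets boiled down to a list of numbers, and the numbers from many photos are averaged into a single profile. The sketch below shows just that averaging and matching step in Python; the function names and the 0.9 threshold are made up for illustration, and turning a photo into numbers in the first place is not shown.

```python
import math


def average_vector(vectors):
    """Combine the feature vectors from many photos into one averaged profile."""
    count = len(vectors)
    return [sum(column) / count for column in zip(*vectors)]


def cosine_similarity(a, b):
    """Similarity of two vectors: near 1.0 means pointing the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def looks_like(profile, candidate, threshold=0.9):
    """Hypothetical match rule: similar enough to the averaged profile."""
    return cosine_similarity(profile, candidate) >= threshold
```

The more photos go into the average, the less any single hat, beard or pair of glasses matters, which is exactly why a big pile of selfies is more useful to a machine than one passport photo.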

Is there anything machines can't do better than humans?

Ok, so we're being ruled by robotic overlords; what else is new... Is there anything humans can actually do better?

Of course, you can teach a computer to take photographs - it isn't even a very big challenge. A computer could even take some highly proficient photographs, technically: Focus, white balance, depth of field, colour saturation - even triggering the camera at exactly the right point in the process of taking a photo - can all be described mathematically, which means that computers are rather good at them. In fact, when you think about it, most of us heavily rely on computers already: Exposure light meters, automatic focus - they've been the computer-powered helpers in the world of photography for years, and we wouldn't want it any other way.

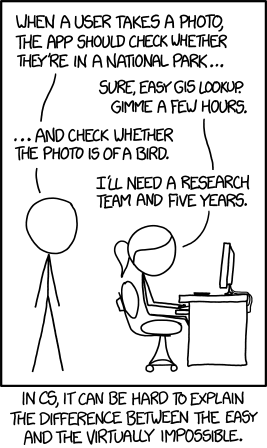

The other side of photography, however is trickier: The artistic side. This is where a recent XKCD comic hit the nail on the head:

Put simply, whereas a photo can be objectively 'bad' technically (out of focus, motion blur, white balance issues, exposure issues, wonky horizons, etc etc etc), what makes a photograph good creatively can be very difficult to pin down, even for humans (Go on, give it a shot: See if you like / admire / understand why each of the top 10 photographs picked by Time magazine as the best of 2013 was chosen. There is a recurring theme; more about that below).

Of course, what makes a 'good' photo is partially down to taste and cultural convention, but it is also down to a question that is very difficult to answer in general: What makes a good photograph? A few technologies already exist to help people determine which photo in a burst of shots is 'best', but they tend to be limited to technical elements (From a set of 10 photos, pick the photo with the least camera blur, the least motion blur, and where people aren't blinking), rather than the aesthetic side of things.
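The technical half of "pick the best shot from a burst" really is simple enough to sketch in a few lines of Python. The version below is a deliberately crude stand-in, not what any real camera app does: it assumes each photo is a grid of greyscale values with rows of equal length, scores sharpness by how strongly neighbouring pixels differ, and ignores motion blur and blinking altogether. The names `sharpness` and `pick_sharpest` are invented for the example.

```python
def sharpness(image):
    """Sum of squared differences between neighbouring pixels.

    `image` is a list of equal-length rows of greyscale values (0-255).
    Blurry photos have soft edges, so they score lower.
    """
    total = 0
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            if x + 1 < len(row):       # neighbour to the right
                total += (row[x + 1] - value) ** 2
            if y + 1 < len(image):     # neighbour below
                total += (image[y + 1][x] - value) ** 2
    return total


def pick_sharpest(burst):
    """Return the index of the photo with the highest sharpness score."""
    return max(range(len(burst)), key=lambda i: sharpness(burst[i]))
```

Note what is missing: nothing in there knows whether the photo is any good. It only knows which one is the least blurry.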

But what about the story?

The other - and perhaps most important - part of photography is where machines truly fall short: Computers may one day be able to create photographs that are technically proficient and aesthetically pleasing, but the most powerful photographs are the ones that have a third layer: A story that's worth telling; a story worth listening to, and thinking about.

Every photograph that ever tugged at your heart-strings will have done so because it tells (part of) a story. In fact, the photo doesn't even have to be particularly creative or technically perfect - a slightly blurry photograph of your recently deceased grandmother could move you to tears - not because of the photograph, but because of the story it tells.

Ultimately, the story is all that matters. A technically perfect photo of a person is a photographic rarity, and may be interesting for that reason. If the lighting and setting are also great, you may be on to a good artistic photograph too. But the reason we identify with portraits is the stories they tell: Either because we (think we) know the person in the photo, or because we, as human beings, relate to something about the person in the photograph.

It may very well be the case that machines have overtaken humans as consumers of photography, but machines have a different purpose than humans: Computers see photographs as datapoints in an almost unfathomably large matrix of data. Humans see photographs as stories and memories. Maybe that's a thought worth taking with you into your next photo shoot - I certainly will.